Object detection

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos.[1] Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance.

Uses

Object detection is widely used in computer vision tasks such as image annotation,[2] vehicle counting,[3] activity recognition,[4] face detection, face recognition, and video object co-segmentation. It is also used to track objects, for example a ball during a football match, the movement of a cricket bat, or a person in a video.

Often, the test images are sampled from a different data distribution than the training images, which makes the object detection task significantly more difficult.[5] To address the domain gap between training and test data, many unsupervised domain adaptation approaches have been proposed.[5][6][7][8][9] A simple and straightforward way to reduce the domain gap is to apply an image-to-image translation approach, such as CycleGAN.[10] Among other uses, cross-domain object detection is applied in autonomous driving, where models can be trained on a vast number of video game scenes, since their labels can be generated without manual labor.

Concept

Every object class has its own characteristic features that help in identifying members of the class; for example, all circles are round. Object class detection uses these features. When looking for circles, objects whose points lie at a particular distance from a single point (the center) are sought. Similarly, when looking for squares, objects that have perpendicular corners and equal side lengths are sought. A similar approach is used for face identification, where the eyes, nose, and lips can be located and features such as skin color and the distance between the eyes can be measured.

Benchmarks

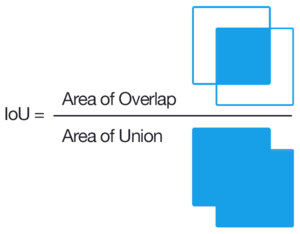

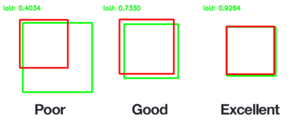

For object localization, a true positive is often defined by thresholding the intersection over union (IoU). For example, if there is a traffic sign in the image, with a bounding box drawn by a human (the "ground truth label"), then a neural network has detected the traffic sign (a true positive) at a threshold of 0.5 if and only if it has drawn a bounding box whose IoU with the ground truth is above 0.5. Otherwise, the predicted bounding box is a false positive.
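The thresholded IoU test can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes axis-aligned boxes written as `(x_min, y_min, x_max, y_max)`, and the function names `iou` and `is_true_positive` are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Assumed box format (not prescribed by the article):
    (x_min, y_min, x_max, y_max).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(predicted, ground_truth, threshold=0.5):
    """A prediction counts as a detection when its IoU is above the threshold."""
    return iou(predicted, ground_truth) > threshold
```

For instance, two 2×2 boxes offset by one unit in each direction overlap in a 1×1 square, so their IoU is 1/7 and the prediction would be a false positive at a threshold of 0.5.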

If there is only a single ground truth bounding box but multiple predictions, then the IoU of each prediction is calculated. The prediction with the highest IoU is a true positive if its IoU is above the threshold; otherwise it is a false positive. All other predicted bounding boxes are false positives. If no prediction has an IoU above the threshold, then the ground truth label counts as a false negative.
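The single-ground-truth rule can be written out as follows. This is a sketch under stated assumptions: boxes use the `(x_min, y_min, x_max, y_max)` format, ties in IoU go to the earliest prediction, and the helper names `iou` and `label_predictions` are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes in the assumed (x_min, y_min, x_max, y_max) format."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_predictions(predictions, ground_truth, threshold=0.5):
    """Label each prediction "TP" or "FP" against one ground truth box.

    Returns (labels, false_negative), where false_negative is True when
    no prediction matched the ground truth.
    """
    labels = ["FP"] * len(predictions)
    if not predictions:
        return labels, True
    scores = [iou(p, ground_truth) for p in predictions]
    # Only the prediction with the highest IoU can be a true positive.
    best = max(range(len(predictions)), key=scores.__getitem__)
    if scores[best] > threshold:
        labels[best] = "TP"
        return labels, False
    return labels, True
```

With a ground truth of `(0, 0, 10, 10)` and predictions `(0, 0, 10, 9)`, `(5, 5, 15, 15)`, and `(0, 0, 10, 10)`, only the third prediction is labeled a true positive, even though the first also has an IoU above 0.5.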

For simultaneous object localization and classification, a true positive is one where the class label is correct, and the bounding box has an IoU exceeding the threshold.

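Simultaneous object localization and classification is benchmarked by the mean average precision (mAP). The average precision (AP) of the network for a class of objects is the area under the precision-recall curve, which is traced out by varying the confidence threshold on the detections while the IoU threshold is held fixed. The mAP is the average of AP over all classes; some benchmarks additionally average over several IoU thresholds.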
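A minimal sketch of the AP and mAP computation follows. It makes several assumptions: the precision-recall curve is traced by sweeping the detection confidence at one fixed IoU threshold, each detection has already been marked as a true or false positive, and the area is taken as a plain step-wise sum without interpolation. Published benchmarks commonly use interpolated variants, so their numbers can differ from what this sketch produces. The function names and input layout are hypothetical.

```python
def average_precision(detections, num_ground_truth):
    """Area under the precision-recall curve for one class.

    detections: list of (confidence, is_true_positive) pairs, already
    matched against the ground truth at a fixed IoU threshold.
    num_ground_truth: number of ground truth boxes of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    # Lowering the confidence threshold admits detections in this order.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_positives = 0
    ap = 0.0
    previous_recall = 0.0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_positives += 1
        precision = true_positives / rank
        recall = true_positives / num_ground_truth
        # Step-wise area: recall gained at this rank times precision there.
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(per_class):
    """per_class maps a class name to (detections, num_ground_truth)."""
    aps = [average_precision(d, n) for d, n in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0
```

For a class with two ground truth boxes and ranked detections that are a hit, a miss, and a hit, the precision at the two recall steps is 1 and 2/3, giving an AP of 0.5 × 1 + 0.5 × 2/3 = 5/6.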

Methods

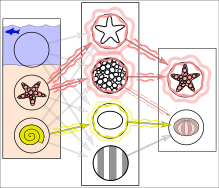

In reality, textures and outlines would not be represented by single nodes, but rather by associated weight patterns of multiple nodes.

Methods for object detection generally fall into either neural network-based or non-neural approaches. Non-neural approaches must first define features using one of the methods below and then use a technique such as a support vector machine (SVM) to do the classification. Neural techniques, on the other hand, can perform end-to-end object detection without specifically defined features, and are typically based on convolutional neural networks (CNNs).

- Non-neural approaches:

- Neural network approaches:

- OverFeat.[13]

- Region Proposals (R-CNN,[14] Fast R-CNN,[15] Faster R-CNN,[16] cascade R-CNN[17]).

- You Only Look Once (YOLO).[18]

- Single Shot MultiBox Detector (SSD).[19]

- Single-Shot Refinement Neural Network for Object Detection (RefineDet).[20]

- Retina-Net.[21][17]

- Deformable convolutional networks.[22][23]

See also

- Feature detection (computer vision)

- Moving object detection

- Small object detection

- Outline of object recognition

- Teknomo–Fernandez algorithm

References

- ^ Dasiopoulou, Stamatia, et al. "Knowledge-assisted semantic video object detection." IEEE Transactions on Circuits and Systems for Video Technology 15.10 (2005): 1210–1224.

- ^ Ling Guan; Yifeng He; Sun-Yuan Kung (1 March 2012). Multimedia Image and Video Processing. CRC Press. pp. 331–. ISBN 978-1-4398-3087-1.

- ^ Alsanabani, Ala; Ahmed, Mohammed; AL Smadi, Ahmad (2020). "Vehicle Counting Using Detecting-Tracking Combinations: A Comparative Analysis". 2020 the 4th International Conference on Video and Image Processing. pp. 48–54. doi:10.1145/3447450.3447458. ISBN 9781450389075. S2CID 233194604.

- ^ Wu, Jianxin; Osuntogun, Adebola; Choudhury, Tanzeem; Philipose, Matthai; Rehg, James M. (2007). "A Scalable Approach to Activity Recognition based on Object Use". 2007 IEEE 11th International Conference on Computer Vision. pp. 1–8. doi:10.1109/ICCV.2007.4408865. ISBN 978-1-4244-1630-1.

- ^ a b Oza, Poojan; Sindagi, Vishwanath A.; VS, Vibashan; Patel, Vishal M. (2021-07-04). "Unsupervised Domain Adaptation of Object Detectors: A Survey". arXiv:2105.13502 [cs.CV].

- ^ Khodabandeh, Mehran; Vahdat, Arash; Ranjbar, Mani; Macready, William G. (2019-11-18). "A Robust Learning Approach to Domain Adaptive Object Detection". arXiv:1904.02361 [cs.LG].

- ^ Soviany, Petru; Ionescu, Radu Tudor; Rota, Paolo; Sebe, Nicu (2021-03-01). "Curriculum self-paced learning for cross-domain object detection". Computer Vision and Image Understanding. 204: 103166. arXiv:1911.06849. doi:10.1016/j.cviu.2021.103166. ISSN 1077-3142. S2CID 208138033.

- ^ Menke, Maximilian; Wenzel, Thomas; Schwung, Andreas (October 2022). "Improving GAN-based Domain Adaptation for Object Detection". 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC). pp. 3880–3885. doi:10.1109/ITSC55140.2022.9922138. ISBN 978-1-6654-6880-0. S2CID 253251380.

- ^ Menke, Maximilian; Wenzel, Thomas; Schwung, Andreas (2022-08-31). "AWADA: Attention-Weighted Adversarial Domain Adaptation for Object Detection". arXiv:2208.14662 [cs.CV].

- ^ Zhu, Jun-Yan; Park, Taesung; Isola, Phillip; Efros, Alexei A. (2020-08-24). "Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks". arXiv:1703.10593 [cs.CV].

- ^ Ferrie, C., & Kaiser, S. (2019). Neural Networks for Babies. Sourcebooks. ISBN 978-1492671206.

- ^ Dalal, Navneet (2005). "Histograms of oriented gradients for human detection" (PDF). Computer Vision and Pattern Recognition. 1.

- ^ Sermanet, Pierre; Eigen, David; Zhang, Xiang; Mathieu, Michael; Fergus, Rob; LeCun, Yann (2014-02-23). "OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks". arXiv:1312.6229 [cs.CV].

- ^ Girshick, Ross (2014). "Rich feature hierarchies for accurate object detection and semantic segmentation" (PDF). Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. IEEE. pp. 580–587. arXiv:1311.2524. doi:10.1109/CVPR.2014.81. ISBN 978-1-4799-5118-5. S2CID 215827080.

- ^ Girshick, Ross (2015). "Fast R-CNN" (PDF). Proceedings of the IEEE International Conference on Computer Vision. pp. 1440–1448. arXiv:1504.08083.

- ^ Ren, Shaoqing (2015). "Faster R-CNN". Advances in Neural Information Processing Systems. arXiv:1506.01497.

- ^ a b Pang, Jiangmiao; Chen, Kai; Shi, Jianping; Feng, Huajun; Ouyang, Wanli; Lin, Dahua (2019-04-04). "Libra R-CNN: Towards Balanced Learning for Object Detection". arXiv:1904.02701v1 [cs.CV].

- ^ Redmon, Joseph; Divvala, Santosh; Girshick, Ross; Farhadi, Ali (2016-05-09). "You Only Look Once: Unified, Real-Time Object Detection". arXiv:1506.02640 [cs.CV].

- ^ Liu, Wei (October 2016). "SSD: Single Shot MultiBox Detector". Computer Vision – ECCV 2016. Lecture Notes in Computer Science. Vol. 9905. pp. 21–37. arXiv:1512.02325. doi:10.1007/978-3-319-46448-0_2. ISBN 978-3-319-46447-3. S2CID 2141740.

- ^ Zhang, Shifeng (2018). "Single-Shot Refinement Neural Network for Object Detection". Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 4203–4212. arXiv:1711.06897.

- ^ Lin, Tsung-Yi (2020). "Focal Loss for Dense Object Detection". IEEE Transactions on Pattern Analysis and Machine Intelligence. 42 (2): 318–327. arXiv:1708.02002. doi:10.1109/TPAMI.2018.2858826. PMID 30040631. S2CID 47252984.

- ^ Zhu, Xizhou (2018). "Deformable ConvNets v2: More Deformable, Better Results". arXiv:1811.11168 [cs.CV].

- ^ Dai, Jifeng (2017). "Deformable Convolutional Networks". arXiv:1703.06211 [cs.CV].

- "Object Class Detection". Vision.eecs.ucf.edu. Archived from the original on 2013-07-14. Retrieved 2013-10-09.

- "ETHZ – Computer Vision Lab: Publications". Vision.ee.ethz.ch. Archived from the original on 2013-06-03. Retrieved 2013-10-09.

External links

- Weng, Lilian (2017-10-29). "Object Detection for Dummies Part 1: Gradient Vector, HOG, and SS". lilianweng.github.io. Retrieved 2024-09-11.

- Weng, Lilian (2017-12-15). "Object Detection for Dummies Part 2: CNN, DPM and Overfeat". lilianweng.github.io. Retrieved 2024-09-11.

- Weng, Lilian (2017-12-31). "Object Detection for Dummies Part 3: R-CNN Family". lilianweng.github.io. Retrieved 2024-09-11.

- Weng, Lilian (2018-12-27). "Object Detection Part 4: Fast Detection Models". lilianweng.github.io. Retrieved 2024-09-11.

- Multiple object class detection

- Spatio-temporal action localization

- Online Object Detection Demo

- Video object detection and co-segmentation