An overview of data warehouse and data lake in modern enterprise data management

| Full article title | An overview of data warehouse and data lake in modern enterprise data management |
|---|---|
| Journal | Big Data and Cognitive Computing |
| Author(s) | Nambiar, Athira; Mundra, Divyansh |
| Author affiliation(s) | SRM Institute of Science and Technology |
| Primary contact | Email: athiram at srmist dot edu dot in |
| Year published | 2022 |
| Volume and issue | 6(4) |
| Article # | 132 |
| DOI | 10.3390/bdcc6040132 |
| ISSN | 2504-2289 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.mdpi.com/2504-2289/6/4/132 |
| Download | https://www.mdpi.com/2504-2289/6/4/132/pdf (PDF) |

Abstract

Data is the lifeblood of any organization. In today’s world, organizations recognize the vital role of data in modern business intelligence systems for making meaningful decisions and staying competitive in the field. Efficient and optimal data analytics provides a competitive edge to its performance and services. Major organizations generate, collect, and process vast amounts of data, falling under the category of "big data." Managing and analyzing the sheer volume and variety of big data is a cumbersome process. At the same time, proper utilization of the vast collection of an organization’s information can generate meaningful insights into business tactics. In this regard, two of the more popular data management systems in the area of big data analytics—the data warehouse and data lake—act as platforms to accumulate the big data generated and used by organizations. Although seemingly similar, both of them differ in terms of their characteristics and applications.

This article presents a detailed overview of the roles of data warehouses and data lakes in modern enterprise data management. We detail the definitions, characteristics, and related works for the respective data management frameworks. Furthermore, we explain the architecture and design considerations of the current state of the art. Finally, we provide a perspective on the challenges and promising research directions for the future.

Keywords: big data, data warehousing, data lake, enterprise data management, OLAP, ETL tools, metadata, cloud computing, internet of things

Introduction

"Big data analytics" is one of the buzzwords in today’s digital world. It entails examining big data and uncovering any hidden patterns, correlations, etc. available in the data.[1] Big data analytics extracts and analyzes random data sets, forming them into meaningful information. According to statistics, the overall amount of data generated in the world in 2021 was approximately 79 zettabytes, and this is expected to double by 2025.[2] This unprecedented amount of data was the result of a data explosion that occurred, wherein data interactions increased by 5,000 percent since 2010.[3]

Big data deals with the volume, variety, and velocity of data, while seeking veracity (trustworthiness) and value in the data. These are known, in part, as the "Vs" of big data.[4] An unprecedented amount of diverse data is acquired, stored, and processed with high data quality for various application domains. These data originate from business transactions, real-time streaming, social media, video analytics, and text mining, creating a huge amount of semi-structured or unstructured data to be stored in different information silos.[5] Efficient integration and analysis of these data across silos are required to gain complete insight into them. This remains an open research topic.

Big data and its related emerging technologies have been changing the way e-commerce and e-services operate and have been opening new frontiers in business analytics and related research.[6] Big data analytics systems play a big role in the modern enterprise management domain, from product distribution to sales and marketing, as well as the analysis of hidden trends, similarities, and other insights, giving companies opportunities to optimize their data and find new opportunities within it.[7] Since organizations with better and more accurate data can make more informed business decisions by looking at market trends and customer preferences, they can gain competitive advantages over others. Hence, organizations invest tremendously in artificial intelligence (AI) and big data technologies to strive toward digital transformation and data-driven decision making, which ultimately leads to advanced business intelligence (BI).[6] As per reports, the worldwide big data analytics and BI software application markets are projected to grow by USD 68 billion and USD 17.6 billion, respectively, by 2024–2025.[8]

Big data repositories exist in many forms, as per the requirements of corporations.[9] An effective data repository needs to unify, regulate, evaluate, and deploy a huge amount of data resources to enhance analytics and query performance. Based on the nature and the application scenario, there are many different types of data repositories outside of traditional relational databases. Two of the more popular data repositories among them are enterprise data warehouses and data lakes.[10][11][12]

A data warehouse (DW) is a data repository which stores structured, filtered, and processed data that has been treated for a specific purpose, whereas a data lake (DL) is a vast pool of data for which the purpose is not or has not yet been defined.[9] In detail, DWs store large amounts of data collected by different sources, typically using predefined schemas. Typically, a DW is a purpose-built relational database running on specialized hardware either on the premises of an organization or in the cloud.[13] DWs have been used widely for storing enterprise data and fueling BI and analytics applications.[14][15][16]

Data lakes (DLs) have emerged as big data repositories that store raw data and provide a rich list of functionalities with the help of metadata descriptions.[10] Although the DL is also a form of enterprise data storage, it does not inherently include the same analytics features commonly associated with DWs. Instead, DLs are repositories that store raw data in their original formats and provide a common access interface. From the lake, data may flow downstream to a DW to be processed, packaged, and made ready for consumption. Because the DL is a relatively new concept, research discussing its various aspects remains very limited, with much of the discussion appearing in internet articles or blogs.

Although DWs and DLs share some overlapping features and use cases, there are fundamental differences in the data management philosophies, design characteristics, and ideal use conditions for each of these technologies. In this context, we provide a detailed overview and differences between both the DW and DL data management schemes in this survey paper. Furthermore, we consolidate the concepts and give a detailed analysis of different design aspects, various tools and utilities, etc., along with recent developments that have come into existence.

The remainder of this paper is organized as follows. In the next section, the terminology and basic definitions of big data analytics and the data management schemes are analyzed. Furthermore, the related works in the field are also summarized. After this summation, the architectures of both the DW and DL are presented, followed by their key design and practical aspects, presented at length. Afterwards, the various popular tools and services available for enterprise data management are summarized, followed by explanations of the open challenges and promising directions for these technologies. In particular, the pros and cons of various methods are critically discussed, and the observations are presented. Finally, conclusions are drawn from this research effort.

Definitions of big data analytics, data warehouse, and data lake

The definitions and fundamental notions of various data management schemes are provided in this section. Furthermore, related works and review papers on this topic are also summarized.

Big data analytics

With significant advancements in technology has come the unprecedented usage of computer networks, multimedia, the internet of things (IoT), social media, and cloud computing.[17] As a result, a huge amount of data, known as “big data,” has been generated. Effective data processing is required to collect, manage, and analyze these data efficiently. Big data processing is aimed at data mining (i.e., extracting knowledge from large amounts of data), leveraging data management, machine learning (ML), high-performance computing, statistical charting, pattern recognition, etc. The important characteristics of big data (known as the seven "Vs" of big data) are as follows[18]:

- Volume, or the available amount of data;

- Velocity, or the speed of data processing;

- Variety, or the different types of big data;

- Volatility, or the variability of the data;

- Veracity, or the accuracy of the data;

- Visualization, or the depiction of big data-generated insights through visual representation; and

- Value, or the benefits organizations derive from the data.

There are three main kinds of big data processing: batch processing, stream processing, and hybrid processing.[19] In batch processing, data stored in non-volatile memory are processed, and the probability and temporal characteristics of the data conversion processes are decided by the requirements of the problem. In stream processing, the collected data are processed without being stored in non-volatile media, and the temporal characteristics of the data conversion processes are mainly determined by the incoming data rate. This is suitable for domains that require low response times. Hybrid processing combines both the batch and stream processing techniques to achieve high accuracy and a low processing time.[20] Two examples of hybrid big data processing are Lambda and Kappa Architecture.[21] Lambda Architecture processes huge quantities of data by running batch processing and stream processing side by side. Kappa Architecture is a simpler alternative to Lambda Architecture: it uses a single technology stack to handle both real-time stream processing and historical batch processing, and therefore avoids maintaining two different code bases for the batch and speed layers. The major notion is to facilitate real-time data processing using a single stream-processing engine, thus bypassing the multi-layered Lambda Architecture without compromising the standard quality of service.
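The contrast between the two hybrid architectures can be illustrated with a minimal Python sketch. It is not taken from the cited works: the event data, the `StreamProcessor` class, and the function names are hypothetical, and a real deployment would use a distributed engine rather than in-memory lists. The Lambda path keeps a batch code path and a speed-layer code path and merges their views, whereas the Kappa path replays the whole log through one stream engine.

```python
from collections import defaultdict

# Hypothetical event log of (user, amount) pairs; names are illustrative only.
HISTORICAL = [("alice", 10), ("bob", 5), ("alice", 7)]
RECENT = [("bob", 3), ("carol", 4)]


def batch_view(events):
    """Batch layer: recompute totals from all stored events in one pass."""
    totals = defaultdict(int)
    for user, amount in events:
        totals[user] += amount
    return dict(totals)


class StreamProcessor:
    """Incremental engine: updates running totals one event at a time."""

    def __init__(self):
        self.totals = defaultdict(int)

    def process(self, event):
        user, amount = event
        self.totals[user] += amount


def lambda_query(historical, recent):
    """Lambda: merge a batch view with a separately maintained speed view."""
    merged = batch_view(historical)      # batch code path
    speed = StreamProcessor()            # speed-layer code path
    for event in recent:
        speed.process(event)
    for user, amount in speed.totals.items():
        merged[user] = merged.get(user, 0) + amount
    return merged


def kappa_query(log):
    """Kappa: replay the entire log through the single stream engine."""
    engine = StreamProcessor()
    for event in log:
        engine.process(event)
    return dict(engine.totals)


lambda_result = lambda_query(HISTORICAL, RECENT)
kappa_result = kappa_query(HISTORICAL + RECENT)
print(lambda_result == kappa_result)
```

Both paths arrive at the same totals here; the difference lies in how many code paths have to be maintained to get there.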

Data warehouse

The concept of DWs was introduced in the late 1980s by IBM researchers Barry Devlin and Paul Murphy, with the aim to deliver an architectural model to solve the flow of data to decision support environments.[22] According to the definition by Inmon, “a data warehouse is a subject-oriented, nonvolatile, integrated, time-variant collection of data in support of management decisions.”[23] Formally, a DW is a large data repository wherein data can be stored and integrated from various sources in a well-structured manner and help in the decision-making process via proper data analytics.[24] The process of compiling information into a DW is known as data warehousing.

In enterprise data management, data warehousing is referred to as a set of decision-making systems targeted toward empowering the information specialist (leader, administrator, or analyst) to improve decision making and make decisions quicker. Hence, DW systems act as an important tool of BI, being used in enterprise data management by most medium and large organizations.[25][26] The past decade has seen unprecedented development both in the number of products and services offered and in the wide-scale adoption of these advancements by the industry. According to a comprehensive research report by Market Research Future (MRFR) titled “Data Warehouse as a Service Market information by Usage, by Deployment, by Application and Organization Size—forecast to 2028,” the market size will reach USD 7.69 billion, growing at a compound annual growth rate of 24.5%, by 2028.[27]

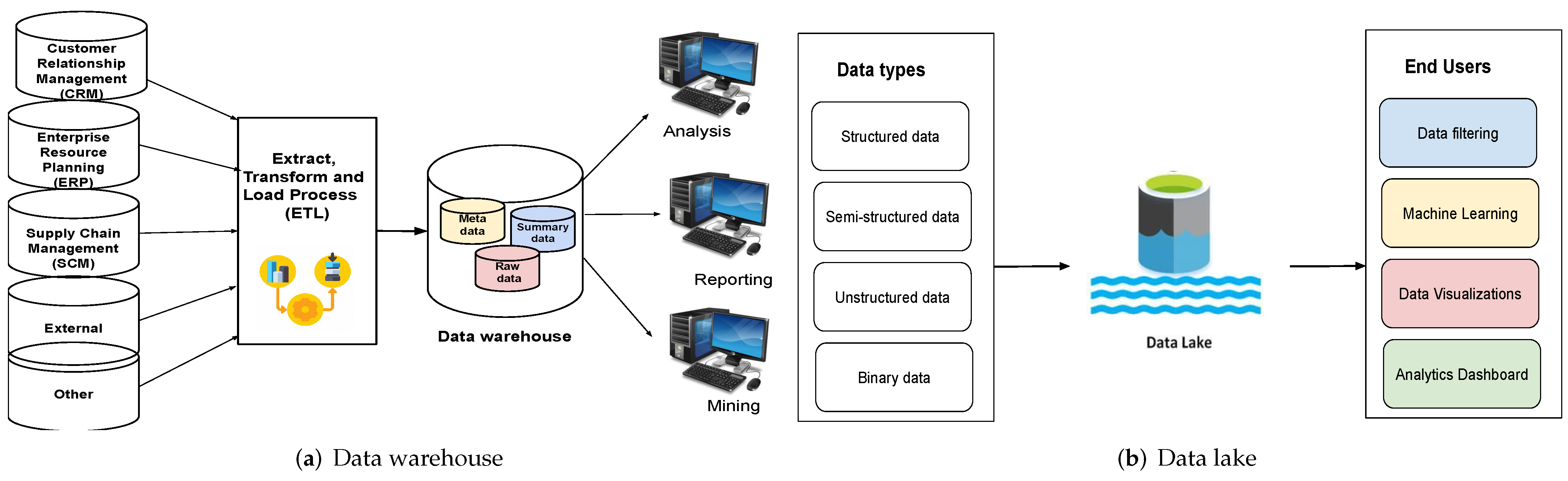

In the DW framework, data are periodically extracted from the applications that support business operations and copied onto dedicated processing systems. They may then be validated, converted, restructured, and augmented with input from other sources. The resulting DW then becomes a primary source of data for the production, analysis, and presentation of reports via ad hoc reports, portals, and dashboards. It employs “online analytical processing” (OLAP), whose functional and performance requirements differ from those of the “online transaction processing” (OLTP) applications typically supported by operational databases.[28][29] OLTP programs often automate the handling of clerical data processes, such as order entry and banking transactions, which are an organization’s necessary day-to-day activities. DWs, on the other hand, are primarily concerned with decision support. As shown in Figure 1a, a DW integrates data from various sources and helps with analysis, data mining, and reporting. A detailed description of a DW’s architecture is presented in the next major section.
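The difference between OLTP-style and OLAP-style workloads can be sketched with Python's built-in `sqlite3` module. This is only an illustration under simplifying assumptions: the `orders` table and its columns are hypothetical, and a single in-memory database stands in for what would normally be separate operational and warehouse systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)

# OLTP-style work: a short transaction that records individual orders.
with conn:  # commits on success, rolls back on error
    conn.execute("INSERT INTO orders (region, amount) VALUES (?, ?)", ("north", 120.0))
    conn.execute("INSERT INTO orders (region, amount) VALUES (?, ?)", ("south", 80.0))
    conn.execute("INSERT INTO orders (region, amount) VALUES (?, ?)", ("north", 50.0))

# OLAP-style work: a read-only aggregate over many rows for decision support.
sales_by_region = conn.execute(
    "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
).fetchall()
print(sales_by_region)
```

The inserts touch one row at a time and must be fast and atomic, while the aggregate scans the whole table, which is why the two workloads are usually served by differently optimized systems.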


DW advancements have benefited various sectors, including manufacturing (for order shipment and customer support), retail (for customer profiling and inventory management), financial services (for claims analysis, risk assessment, billing analysis, and fraud detection), logistics (for fleet management), telecommunications (for call analysis), utility companies (for power usage analysis), and healthcare.[30] The field of data warehousing has seen immense research and development over the last two decades in various research categories such as DW architecture, design, and evolution.

Data lake

By the beginning of the twenty-first century, new types of diverse data were emerging in ever-increasing volumes on the internet and at its interface to the enterprise (e.g., web-based business transactions, real-time streaming, sensor data, and social media). With the huge amount of data around, the need to have better solutions for storing and analyzing large amounts of semi-structured and unstructured data to gain relevant information and valuable insight became apparent. Traditional schema-on-write approaches such as the extract, transform, and load (ETL) process are too inefficient for such data management requirements. This gave rise to another popular modern enterprise data management scheme, the DL.[31][32][33]

DLs are centralized storage repositories that enable users to store raw, unprocessed data in their original format, including unstructured, semi-structured, or structured data, at scale. These help enterprises to make better business decisions via visualizations or dashboards from big data analysis, ML, and real-time analytics. A pictorial representation of a DL is given in Figure 1b, above.

According to Dixon, “whilst a data warehouse seems to be a bottle of water cleaned and ready for consumption, then 'Data Lake' is considered as a whole lake of data in a more natural state.”[34] Another definition for the DL is provided by King[35], as a mechanism that “stores disparate information while ignoring almost everything.” An explanation of DLs from an architectural viewpoint is given by Alrehamy and Walker[36]: “A data lake uses a flat architecture to store data in its raw format. Each data entity in the lake is associated with a unique identifier and a set of extended metadata, and consumers can use purpose-built schemas to query relevant data, which will result in a smaller set of data that can be analyzed to help answer a consumer’s question.” A DL houses data in its original raw form. The data in DLs can vary drastically in size and structure, and they lack any specific organizational structure. A DL can accommodate either very small or huge amounts of data as required. All of these features provide flexibility and scalability to DLs. At the same time, challenges related to the implementation and data analytics associated with DLs also arise.
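The architectural description quoted above (flat storage of raw data, a unique identifier and metadata per entity, and schemas applied only at query time) can be sketched in a few lines of Python. The `TinyDataLake` class, its method names, and the sample records are hypothetical and exist only to illustrate the idea; they do not correspond to any particular DL product.

```python
import json
import uuid


class TinyDataLake:
    """Flat store: each entity keeps its raw bytes, a unique id, and metadata."""

    def __init__(self):
        self._objects = {}

    def ingest(self, raw, **metadata):
        """Store raw bytes untouched and return the generated identifier."""
        entity_id = str(uuid.uuid4())
        self._objects[entity_id] = {"raw": raw, "metadata": metadata}
        return entity_id

    def query(self, schema, **wanted):
        """Schema-on-read: apply `schema` only to entities whose metadata match."""
        results = []
        for obj in self._objects.values():
            if all(obj["metadata"].get(k) == v for k, v in wanted.items()):
                results.append(schema(obj["raw"]))
        return results


def temperature_schema(raw):
    """Purpose-built schema a consumer applies when reading sensor data."""
    record = json.loads(raw)
    return record["sensor"], record["celsius"]


lake = TinyDataLake()
lake.ingest(b'{"sensor": "t1", "celsius": 21.5}', source="iot", fmt="json")
lake.ingest(b"2022-01-01,login,alice", source="weblog", fmt="csv")
lake.ingest(b'{"sensor": "t2", "celsius": 19.0}', source="iot", fmt="json")

readings = lake.query(temperature_schema, source="iot")
print(sorted(readings))
```

Nothing is parsed or validated at ingestion time, so the CSV log line and the JSON sensor readings sit side by side; structure is imposed only by the schema a consumer chooses to apply.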

DLs are becoming increasingly popular for organizations to store their data in a centralized manner. A DL may contain unstructured or multi-structured data, much of which may have unrealized value for the enterprise. This allows organizations to store their data from different sources without any overhead related to the transformation of the data.[31] This also allows ad hoc data analyses to be performed on these data, which can then be used by organizations to drive key insights and data-driven decision making. DLs replace the previous way of organizing and processing data from various sources with a centralized, efficient, and flexible repository that allows organizations to maximize their gains from a data-driven ecosystem. DLs also allow organizations to scale them to their needs. This is achieved by separating storage from computation. The complex transformation and preprocessing of data required for DWs are eliminated. The upfront financial overhead of data ingestion is also reduced. Once data are collated in the lake or hub, they are available for analysis by the organization.

The differences between data warehouses and data lakes

Although the terms DW and DL are sometimes used interchangeably, they are not the same.[22] One of the major differences between them is the structure of the data they hold (i.e., processed vs. raw data). A DW stores data in processed and filtered form, whereas a DL stores raw or unprocessed data. Specifically, data are processed and organized into a single schema before being put into the warehouse, whereas raw and unstructured data are fed into a DL. Analysis is performed on the cleansed data in the warehouse. In a DL, by contrast, data are selected and organized as and when needed.

For storing processed data, a DW is economical. DLs, by contrast, have a comparatively larger capacity than DWs and are ideal for analyzing raw and unprocessed data and for employing ML. Another key difference is the objective or purpose of use. Typically, processed data that flow into DWs are used for specific purposes, and hence storage space is not wasted, whereas the purpose of the data in a DL is not defined in advance, and the data can ideally be used for any purpose. No specialized expertise is required to use processed or filtered data, as familiarity with the presentation of data (e.g., charts, sheets, tables, and presentations) suffices. Hence, DWs can be used by most business users. By contrast, it is comparatively difficult to analyze DLs without familiarity with unprocessed data, hence requiring data scientists with appropriate skills or tools to comprehend them for specific business use. Accessibility or ease of use of the data repository is yet another aspect that differentiates DWs and DLs. Since the architecture of a DL imposes no fixed structure, it is flexible to use. The structure of a DW, by contrast, ensures that no unvetted data enter it, and it is very costly to modify. This feature makes it very secure, too. A detailed analysis of the differences between DWs and DLs is given in Table 1.


A literature review of data warehouses and data lakes

A summary of various research works in the field of DWs and DLs is presented here. Table 2 presents a list of various survey articles on DWs and DLs. Mainly, DW review works address architecture modeling and its comparisons[37][38], the evolution of the DW concept[39], real-time data warehousing and ETL[40], etc. Compared with the DW literature reviews, DL papers are relatively fewer in number. DL review works summarize recent approaches and the architecture of DLs[33][34] as well as the design and implementation aspects.[31] To the best of our knowledge, only one work on comparing DWs and DLs was found in the literature.[12] In contrast to that article, our work provides a comprehensive analysis of both data management schemes by addressing various aspects, such as definitions, architecture, practical design considerations, tools and services, challenges, and opportunities in detail. In addition to the survey papers, we also consolidate various works on DWs and DLs in the reported literature and classify them in Table 3 based on their functions and utility.


Architecture

In this section, the architectures of the DW and DL schemes are described in detail. Furthermore, the classification of DW and DL solutions based on function is carried out and summarized as a table.

Data warehouse architecture

The DW architecture contains historical and cumulative data from multiple sources. There are three kinds of architectures[57]:

- Single-tier architecture: This kind of single-layer model minimizes the amount of data stored. It helps remove data redundancy. However, its disadvantage is the lack of a component that separates analytical and transactional processing. This kind of architecture is not frequently used in practice.

- Two-tier architecture: This model separates physically available sources and the DW by means of a staging area. Such an architecture makes sure that all data loaded into the warehouse are in an appropriate cleansed format. Nevertheless, this architecture is neither expandable nor able to support many end users. Additionally, it has connectivity problems due to network limitations.

- Three-tier architecture: This is the most widely used architecture for DWs.[58][59] It consists of a top, middle, and bottom tier. In the bottom tier, data are cleansed, transformed, and loaded via backend tools. This tier serves as the database of the DW. The middle tier is an OLAP server that presents an abstract view of the database by acting as a mediator between the end user and the database. The top tier, the front-end client layer, consists of the tools and an application programming interface (API) that are used to connect and get data out from the DW (e.g., query tools, reporting tools, managed query tools, analysis tools, and data mining tools).

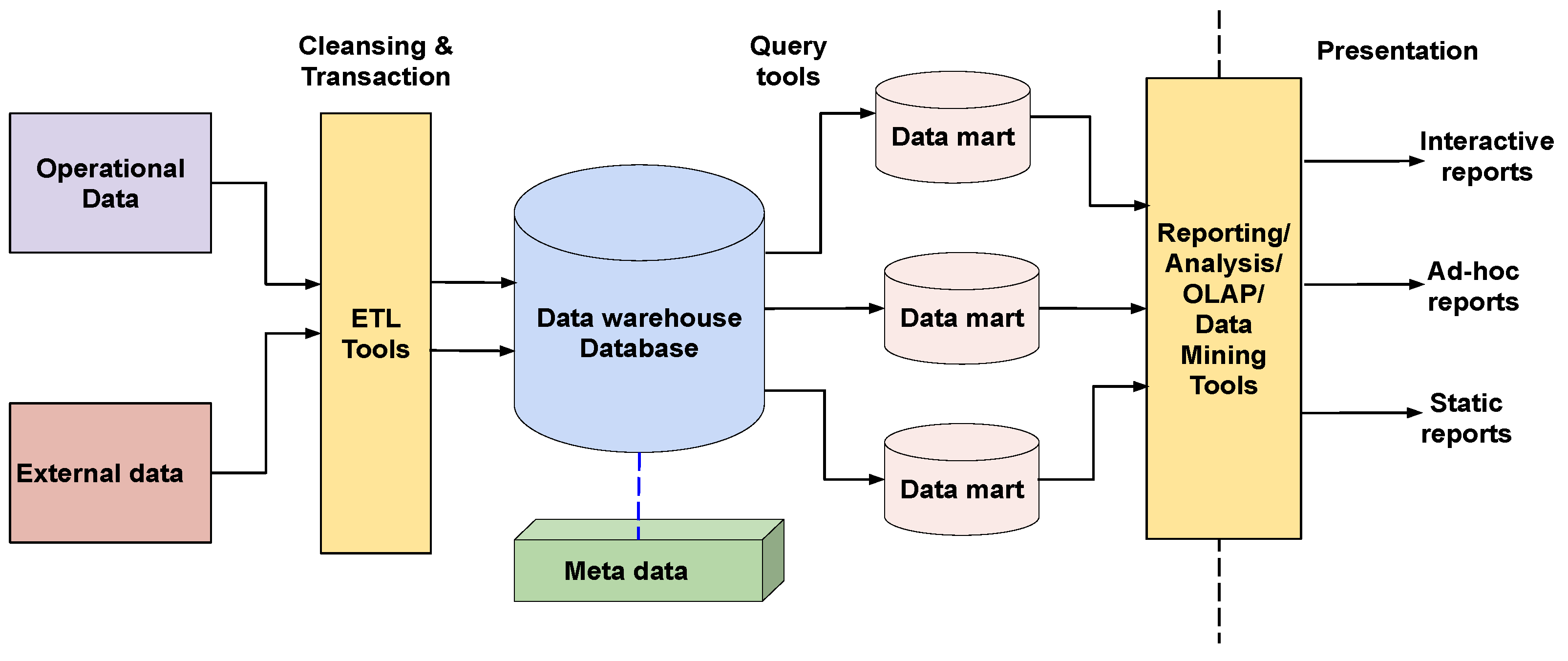

The architecture of a DW is shown in Figure 2. It consists of a central information repository that is surrounded by some key DW components, making the entire environment functional, manageable, and accessible.


Various components of the DW (from Figure 2) are now described.

Data warehouse database: The core foundation of the DW environment is its central database. This is implemented using relational database management system (RDBMS) technology.[60] However, there is a limitation to such implementations, since the traditional RDBMS system is optimized for transactional database processing and not for data warehousing. In this regard, the alternative means are (1) the usage of relational databases in parallel, which enables shared memory on various multiprocessor configurations or parallel processors, (2) new index structures to get rid of relational table scanning and improve the speed, and (3) multidimensional databases (MDDBs) used to circumvent the limitations caused by the relational DW models.

Extract, transform, and load (ETL) tools: All the conversions, summarizations, and changes required to transform data into a unified format in the DW are carried out via ETL tools.[61] This ETL process helps the DW achieve enhanced system performance and BI, timely access to data, and a high return on investment:

- Extraction: This involves connecting systems and collecting the data needed for analytical processing.

- Transformation: The extracted data are converted into a standard format.

- Loading: The transformed data are imported into a large DW.

ETL anonymizes confidential and sensitive information, as per regulatory stipulations, before loading it into the target data store.[62] ETL also prevents unwanted data in operational databases from being loaded into DWs. ETL tools carry out amendments to the data arriving from different sources and calculate summaries and derived data. Such ETL tools generate background jobs, COBOL programs, shell scripts, etc. that regularly update the data in the DW. ETL tools also help with maintaining the metadata.
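The extract, transform, and load steps described above can be sketched as three small Python functions. This is a minimal illustration, not a production ETL tool: the source records, table name, and column names are hypothetical, and replacing an email address with a SHA-256 hash is only a simple form of pseudonymization standing in for whatever anonymization a given regulation actually requires.

```python
import hashlib
import sqlite3

# Hypothetical operational records; the fields are illustrative assumptions.
SOURCE_ROWS = [
    {"email": "a@example.com", "country": "in", "amount": "120.50"},
    {"email": "b@example.com", "country": "IN", "amount": "80"},
    {"email": "c@example.com", "country": "us", "amount": None},  # incomplete
]


def extract():
    """Extraction: collect the records needed for analytical processing."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transformation: drop unwanted rows, unify formats, mask identifiers."""
    cleaned = []
    for row in rows:
        if row["amount"] is None:
            continue  # keep incomplete operational data out of the warehouse
        customer_key = hashlib.sha256(row["email"].encode()).hexdigest()
        cleaned.append((customer_key, row["country"].upper(), float(row["amount"])))
    return cleaned


def load(conn, rows):
    """Loading: import the transformed rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(customer_key TEXT, country TEXT, amount REAL)"
    )
    with conn:
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)


warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract()))

summary = warehouse.execute(
    "SELECT country, SUM(amount) FROM sales GROUP BY country"
).fetchall()
print(summary)
```

Because the masking and filtering happen before the load step, the warehouse table never contains the raw email addresses or the incomplete row.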

Metadata: Metadata is the data about the data that define the DW.[63] It deals with some high-level technological concepts and helps with building, maintaining, and managing the DW. Metadata plays an important role in transforming data into knowledge, since it defines the source, usage, values, and features of the DW and how to update and process the data in a DW. Metadata tooling is the most difficult component to choose due to the lack of a clear standard. Efforts are being made among data warehousing tool vendors to unify a metadata model. One category of metadata, known as technical metadata, contains information about the warehouse that is used by its designers and administrators, whereas another category, called business metadata, contains details that enable end users to understand the information stored in the DW.

Query tools: Query tools allow users to interact with the DW system and collect information relevant to businesses to make strategic decisions. Such tools can be of different types:

- Query and reporting tools: Such tools help organizations generate regular operational reports and support high-volume batch jobs such as printing and calculating. Some popular reporting tool vendors are Brio, Oracle, Powersoft, and SAS Institute. Similarly, query tools help end users avoid the pitfalls of SQL and database structure by inserting a meta-layer between the users and the database.

- Application development tools: In addition to the built-in graphical and analytical tools, application development tools are leveraged to satisfy the analytical needs of an organization.

- Data mining tools: These tools help automate the process of discovering meaningful new correlations and structures by mining large amounts of data.

- OLAP tools: OLAP tools exploit the concepts of a multidimensional database and help analyze the data using complex multidimensional views.[29][64] There are two types of OLAP tools: multidimensional OLAP (MOLAP) and relational OLAP (ROLAP).[65] With MOLAP, a cube is aggregated from the relational data source. Based on the user report request, the MOLAP tool generates a prompt result, since all the data are already pre-aggregated within the cube.[66] In the case of ROLAP, the ROLAP engine acts as a smart SQL generator. It comes with a “designer” piece, wherein the administrator specifies the association between the relational tables, attributes, and hierarchy map and the underlying database tables.[67]
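The difference between the two OLAP styles can be sketched as follows. This is an illustrative simplification under stated assumptions: the `sales` facts, the `build_cube` helper, and the `rolap_query` function are hypothetical, and real MOLAP and ROLAP engines are far more elaborate. The MOLAP-style path pre-aggregates every combination of dimension values into a cube and answers requests by lookup, while the ROLAP-style path generates SQL against the relational tables on demand.

```python
import sqlite3
from collections import defaultdict

# Hypothetical fact rows: (year, region, amount).
FACTS = [
    ("2021", "north", 100),
    ("2021", "south", 60),
    ("2022", "north", 90),
    ("2022", "south", 40),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (year TEXT, region TEXT, amount INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", FACTS)


def build_cube(rows):
    """MOLAP-style: pre-aggregate every (year, region) combination.

    None stands for "all values" along that dimension.
    """
    cube = defaultdict(int)
    for year, region, amount in rows:
        for y in (year, None):
            for r in (region, None):
                cube[(y, r)] += amount
    return dict(cube)


ALLOWED_DIMENSIONS = ("year", "region")


def rolap_query(connection, group_by):
    """ROLAP-style: generate and run SQL for the requested dimension."""
    if group_by not in ALLOWED_DIMENSIONS:
        raise ValueError(f"unknown dimension: {group_by}")
    sql = (
        f"SELECT {group_by}, SUM(amount) FROM sales "
        f"GROUP BY {group_by} ORDER BY {group_by}"
    )
    return connection.execute(sql).fetchall()


cube = build_cube(FACTS)
molap_north = cube[(None, "north")]          # answered by lookup in the cube
rolap_by_region = rolap_query(conn, "region")  # answered by generated SQL
print(molap_north, rolap_by_region)
```

The cube answers instantly but must be rebuilt when the facts change, whereas the generated SQL always reflects the current tables at the cost of computing the aggregate at query time.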

Data lake architecture

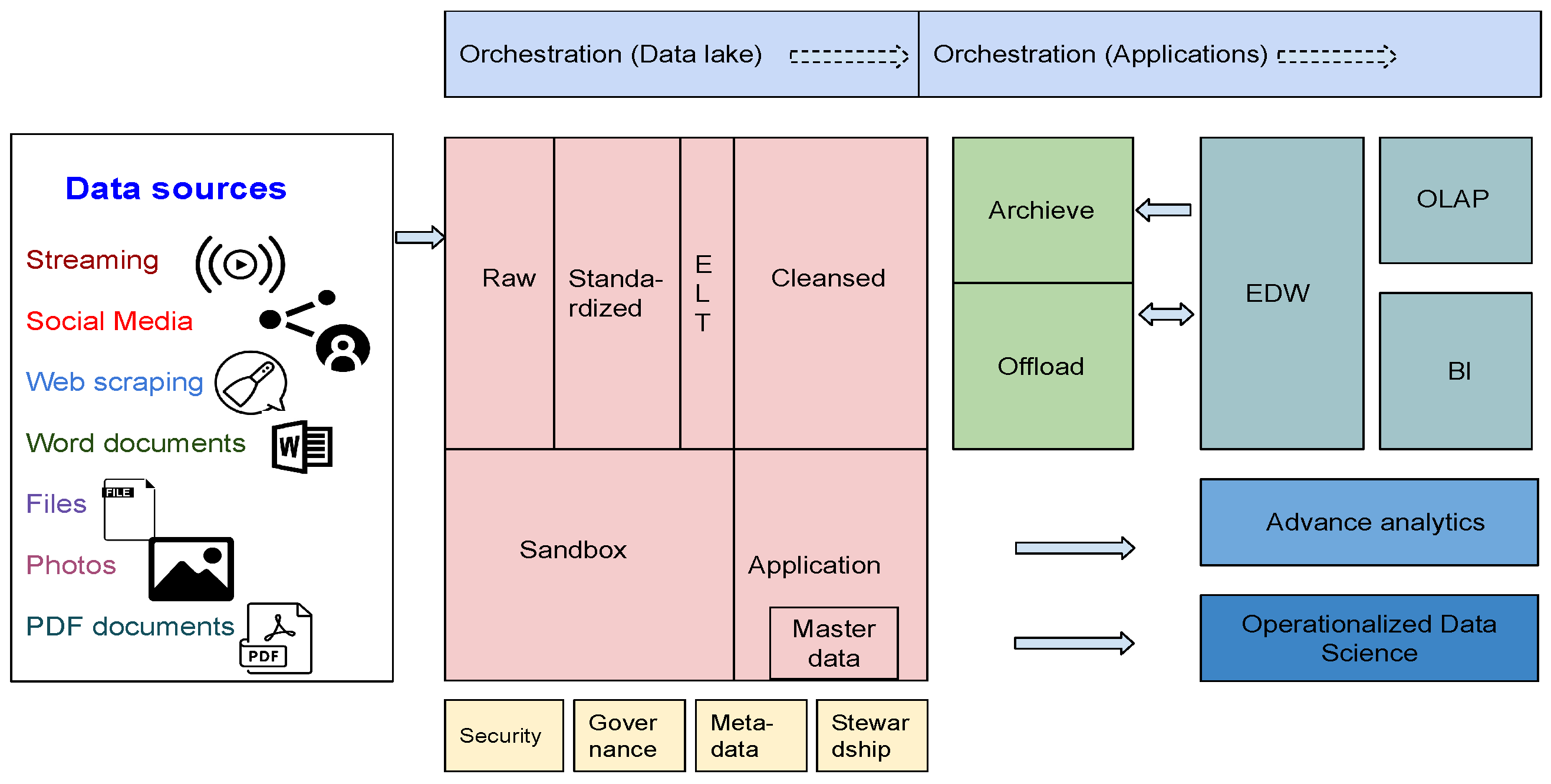

The architecture of a business DL is depicted in Figure 3. Although it is treated as a single repository, it can be distinguished as separate layers in most cases.


Various components of the DL (from Figure 3) are now described.

Raw data layer: This layer is also known as the ingestion layer or landing area because it acts as the sink of the DL. The prime goal is to ingest raw data as quickly and as efficiently as possible. No transformations are allowed at this stage. With the help of the archive, it is possible to get back to a point in time with raw data. Overriding (i.e., handling duplicate versions of the same data) is not permitted. End users are not granted access to this layer. The data here are not ready to use, and consuming them appropriately requires considerable knowledge.

Standardized data layer: This is optional in most implementations. If one expects fast growth for his or her DL architecture, then this is a good option. The prime objective of the standardized layer is to boost the performance of the data transfer from the raw layer to the curated layer. In the raw layer, data are stored in their native format, whereas in the standardized layer, the appropriate format that fits best for cleansing is selected.

Cleansed layer or curated layer: In this layer, data are transformed into consumable data sets and stored in files or tables. This is one of the most complex parts of the whole DL solution since it requires cleansing, transformation, denormalization, and consolidation of different objects. Furthermore, the data are organized by purpose, type, and file structure. Usually, end users are granted access only to this layer.

Application layer: This is also known as the trusted layer, secure layer, or production layer. This is sourced from the cleansed layer and enforced with requisite business logic. In case the applications use ML models on the DL, they are obtained from here. The structure of the data is the same as in the cleansed layer.

Sandbox data layer: This is also another optional layer that is meant for analysts’ and data scientists’ work to carry out experiments and search for patterns or correlations. The sandbox data layer is the proper place to enrich the data with any source from the internet.

Security: While DLs are not exposed to a broad audience, the security aspects are of great importance, especially during the initial phase and architecture design. DLs are not like relational databases, which have an arsenal of security mechanisms.

Governance: Monitoring and logging operations become crucial at some point while performing analysis.

Metadata: This is the data about data. Most schemas hold additional details about the purpose of the data, with descriptions of how they are meant to be used.

Stewardship: Based on the scale that is required, either the creation of a separate role or delegation of this responsibility to the users will be carried out, possibly through some metadata solutions.

Master data: This is an essential part of serving ready-to-use data. It can be either stored on the DL or referenced while executing ETL processes.

Archive: DLs keep some archive data that come from data warehousing. Otherwise, performance and storage-related problems may occur.

Offload: This area helps to offload some time- and resource-consuming ETL processes to a DL in case of relational data warehousing solutions.

Orchestration and ETL processes: Once the data are pushed from the raw layer through the cleansed layer and to the sandbox and application layers, a tool is required to orchestrate the flow. Either an orchestration tool or some additional resources to execute them are leveraged in this regard.
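The flow of data through the layers described above can be sketched as a chain of small Python functions run by a trivial orchestrator. The layer functions, the sample records, and the business rule (a total per customer) are all hypothetical; in practice a dedicated orchestration tool would schedule and monitor these steps.

```python
import json


def to_standardized(raw_records):
    """Raw -> standardized: parse native JSON lines into dicts, no cleansing."""
    return [json.loads(line) for line in raw_records]


def to_cleansed(records):
    """Standardized -> cleansed: drop incomplete rows and normalize types."""
    return [
        {"customer": r["customer"].strip().lower(), "amount": float(r["amount"])}
        for r in records
        if r.get("customer") and r.get("amount") is not None
    ]


def to_application(records):
    """Cleansed -> application: apply business logic (total per customer)."""
    totals = {}
    for r in records:
        totals[r["customer"]] = totals.get(r["customer"], 0.0) + r["amount"]
    return totals


PIPELINE = [to_standardized, to_cleansed, to_application]


def orchestrate(raw_records, steps=PIPELINE):
    """Run each layer transition in order, feeding one step's output to the next."""
    data = raw_records
    for step in steps:
        data = step(data)
    return data


# The raw layer is never modified; every later layer is derived from it.
RAW = [
    '{"customer": " Alice ", "amount": "10"}',
    '{"customer": "alice", "amount": 5}',
    '{"customer": null, "amount": 3}',
]

result = orchestrate(RAW)
print(result)
```

Keeping each transition as a separate step makes it possible to rerun the pipeline from the untouched raw layer whenever the cleansing rules or business logic change.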

Many implementations of a DL are originally based on Apache Hadoop, a widely popular big data tool especially suitable for batch processing workloads of big data.[68] It uses the Hadoop Distributed File System (HDFS) as its core storage and MapReduce (MR) as the basic computing model. Novel computing models are constantly proposed to cope with the increasing needs for batch processing performance (e.g., Tez, Spark, and Presto).[69][70] The MR model has also been replaced with the directed acyclic graph (DAG) model, which improves computing models’ abstract concurrency.
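The MapReduce computing model mentioned above can be illustrated with a single-process Python sketch of the classic word count. It does not use Hadoop's API; the function names are hypothetical and the "shuffle" is a plain in-memory grouping, whereas a real cluster would distribute the map and reduce tasks across nodes and read input from HDFS.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)


def map_reduce(documents):
    """Run map, shuffle, and reduce in sequence over all documents."""
    mapped = chain.from_iterable(map_phase(d) for d in documents)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


counts = map_reduce(["data lake data", "data warehouse"])
print(counts)
```

Each map call depends only on its own input split and each reduce call only on one key's values, which is what allows the model to be parallelized for batch workloads.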

The second phase of DL evolution came with the arrival of Lambda Architecture[71][72], owing to the constant changes in data processing capabilities and processing demand. It introduces stream computing engines, such as Storm, Spark Streaming, and Flink.[73] In such a framework, batch processing is combined with stream computing to meet the needs of many emerging applications. Yet another advanced phase is seen with Kappa Architecture.[74] The two models of batch processing and stream computing are unified by improving the stream computing concurrency and increasing the time window of streaming data. In this regard, a stream computing engine with an inherently scalable distributed architecture is used.

Design aspects

The design aspects and practical implementation constraints are to be studied in detail to develop a suitable data management solution. This section presents the design aspects to be considered in DW- and DL-based enterprise data management.

Data warehouse design considerations for business needs

To design a successful DW, one should first understand the requirements of the organization and develop a framework for them. There are some key criteria to keep in mind when choosing a DW. The very first design consideration in a DW is the business and user needs. Hence, during the design phase, the integration of the DW with existing business processes and its compatibility with long-term strategies have to be ensured. Enterprises have to clearly comprehend the purpose of their DW, any technical requirements, the benefits to end users, and the improved means of reporting for BI and analytics. In this regard, identifying what information is important to the business is essential to the success of the DW. To facilitate this, creating an appropriate data model of the business, using a tool such as SQL Developer Data Modeler (SDDM), is a key aspect when designing DWs. Furthermore, a data flow diagram can help in depicting the data flow within the company.

While designing a DW, yet another important practical consideration is to leverage a recognized DW modeling standard (e.g., 3NF, star schema (dimensional), and Data Vault).[75] Selecting such a standard architecture and sticking to it can improve the efficiency of a DW development approach. Similarly, an agile DW methodology is also an important practical aspect. With proper planning, DW projects can be compartmentalized into smaller pieces that can be delivered faster. This approach helps to reprioritize DW work as a business’s needs change.
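As an illustration of one of the modeling standards named above, the following sketch builds a very small star schema (one fact table surrounded by dimension tables) in an in-memory SQLite database and runs a typical aggregate query over it. The table names, column names, and sample rows are hypothetical and chosen only for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables describe the "who/what/when" of each fact.
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);

-- The fact table holds measures plus foreign keys to the dimensions.
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    amount REAL
);

INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'), (2, 'Manual', 'Books');
INSERT INTO dim_date VALUES (10, 2022, 1), (11, 2022, 2);
INSERT INTO fact_sales VALUES (1, 10, 100.0), (1, 11, 50.0), (2, 10, 20.0);
""")

# A typical analytical query: total sales per product category.
rows = conn.execute("""
SELECT p.category, SUM(f.amount)
FROM fact_sales AS f
JOIN dim_product AS p ON p.product_id = f.product_id
GROUP BY p.category
ORDER BY p.category
""").fetchall()
print(rows)
```

The same query shape (join the fact table to one or more dimensions, then group by a dimension attribute) applies regardless of the DW product used; SQLite is used here only because it ships with Python.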

The choice of storage is also an important consideration. Enterprises can opt for either on-premises architecture or a cloud DW.[13] On-premises architectures require setting up the physical environment, including all the servers necessary to power ETL processes, storage, and analytic operations, whereas third-party cloud implementations can skip this step. However, a few circumstances exist where it still makes sense to consider an on-premises approach. For example, if most of the critical databases are on-premises and are old enough that they will not work well with cloud-based DWs, an on-premises DW may be preferable. Furthermore, if the organization has to deal with strict regulatory requirements, which might include no offshore data storage, an on-premises setting might be the better choice. Nevertheless, cloud-based services provide the most flexible data warehousing service in the market in terms of storage and the cloud's pay-as-you-go nature.

The organization’s ecosystem also plays a key role. Adopting a DW automation tool ensures the efficient usage of IT resources, faster implementation through projects, and better support by enforcing coding standards (e.g., Wherescape, AnalytixDS, Ajilius). The data modeling planning step imparts detailed, reusable documentation of a DW’s implementation. Specifically, it assesses the data structures, investigates how to efficiently represent these sources in the DW, specifies OLAP requirements, etc.

Selection of the appropriate ETL or extract, load, and transform (ELT) solution is yet another design concern.[40] When businesses use expensive in-house analytics systems, much of the prep work, including transformations, can be conducted before loading, as in the ETL scheme. However, ELT is a better approach when the destination is a cloud-based DW. Once data are collocated, the power of a single cloud engine can be leveraged to perform integrations and transformations efficiently. Organizations can then transform their raw data at any time according to their use case, rather than as a fixed step in the data pipeline.
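The difference in ordering between ETL and ELT can be sketched as follows, using an in-memory SQLite database as a stand-in for the warehouse. The source rows, the table names, and the helper to_amount are hypothetical; a real cloud DW would offer far richer transformation functions than the simple SQL filter used here.

```python
import sqlite3

# Hypothetical source rows: (order_id, amount as untidy text).
raw_orders = [("A-1", " 19.99 "), ("A-2", "5.00"), ("A-3", "bad")]


def to_amount(text):
    """Parse an amount string, returning None if it is not numeric."""
    try:
        return float(text.strip())
    except ValueError:
        return None


warehouse = sqlite3.connect(":memory:")

# ETL: transform in application code first, then load only the clean rows.
warehouse.execute("CREATE TABLE orders_etl (order_id TEXT, amount REAL)")
clean = [(oid, to_amount(a)) for oid, a in raw_orders if to_amount(a) is not None]
warehouse.executemany("INSERT INTO orders_etl VALUES (?, ?)", clean)

# ELT: load the raw rows as-is, then transform later inside the warehouse engine.
warehouse.execute("CREATE TABLE orders_raw (order_id TEXT, amount_text TEXT)")
warehouse.executemany("INSERT INTO orders_raw VALUES (?, ?)", raw_orders)
warehouse.execute("""
CREATE VIEW orders_elt AS
SELECT order_id, CAST(TRIM(amount_text) AS REAL) AS amount
FROM orders_raw
WHERE TRIM(amount_text) GLOB '[0-9]*'
""")

etl_rows = warehouse.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = warehouse.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
print(etl_rows, elt_rows)
```

Both paths yield the same clean rows in this example, but in the ELT path the raw table is retained, so a different transformation can be defined later without re-extracting from the source.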

Semantic and reporting layers represent another consideration. Based on previously documented data models, an OLAP server is implemented to facilitate the analytical queries of the users and to empower BI systems. In this regard, data engineers should carefully consider time-to-analysis and latency requirements to assess the analytical processing capabilities of the DW. Similarly, while designing the reporting layer, the implementation of reporting interfaces or delivery methods as well as permissible access have to be set by the administrator.

Finally, ease of scalability should be considered. Understanding current business needs is critical to BI and decision making. This includes how much data the organization currently has and how quickly its needs are likely to grow. Staffing and vendor costs need to be taken into consideration while deciding the scale of growth.

Data lake design aspects for enterprise data management

At a high level, the concept of a DL seems to be simple. Irrespective of the format, it stores data from multiple sources in one place, leverages big data technologies, and deploys on a commodity infrastructure. However, in practice, implementations often fall short of this ideal due to various practical constraints. Hence, it is quite important to consider several key criteria while designing an enterprise DL. Of primary concern is focusing on business objectives rather than technology. By anchoring on the business objectives, a DL project can prioritize its efforts and outcomes accordingly. For instance, for a particular business objective, there may be some data that are more valuable than others. This kind of comprehension and analysis is the key to an enterprise’s DL success. With such a goal-oriented approach, DLs can start small and then learn, adapt, and produce accelerated outcomes for a business. In particular, some key factors in this regard are (1) whether it solves an actual business problem, (2) if it imparts new capabilities, and (3) the access or ownership of data, among others.

Scalability and durability are two more major criteria to consider.[76] Scalability enables scaling to any size of data while importing them in real time. This is an essential criterion for a DL since it is a centralized data repository for an entire organization. Durability deals with providing consistent uptime while ensuring no loss or corruption of data.

Another key design aspect in a DL is its capability to store unstructured, semi-structured, and structured data, which helps organizations to transfer anything from raw, unprocessed data to fully aggregated analytical outcomes.[77] In particular, the DL has to deliver business-ready data. Practically speaking, data by themselves have no meaning. Although file formats and schemas can parse the data (e.g., JSON and XML), they fail at delivering insight into their meaning. To circumvent such a limitation, a critical component of any DL technical design is the incorporation of a knowledge catalog. Such a catalog helps in finding and understanding information assets. The knowledge catalog’s contents include the semantic meaning of the data, format and ownership of data, and data policies, among other elements.
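A knowledge catalog of the kind described above can be sketched as a small in-memory data structure that records the semantic meaning, format, ownership, and policies of each data set. The class names CatalogEntry and KnowledgeCatalog, the field names, and the sample entries are assumptions made for illustration; production catalogs are considerably richer.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Describes one data set stored in the lake."""
    path: str
    data_format: str
    owner: str
    description: str  # the semantic meaning of the data
    policies: list = field(default_factory=list)


class KnowledgeCatalog:
    """Minimal registry that lets users find and understand data sets."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.path] = entry

    def search(self, term):
        """Return entries whose description mentions the term (case-insensitive)."""
        term = term.lower()
        return [e for e in self._entries.values() if term in e.description.lower()]


catalog = KnowledgeCatalog()
catalog.register(CatalogEntry(
    path="raw/crm/customers.json",
    data_format="JSON",
    owner="sales-team",
    description="Customer contact records exported nightly",
    policies=["contains personal data"],
))
catalog.register(CatalogEntry(
    path="raw/iot/sensors.xml",
    data_format="XML",
    owner="plant-ops",
    description="Temperature sensor readings",
))

hits = catalog.search("customer")
print([(h.path, h.owner, h.policies) for h in hits])
```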

Security considerations are also of prime importance in a DL in the cloud. The three domains of security are encryption, network-level security, and access control. Network-level security imparts a robust defense strategy by denying inappropriate access at the network level, whereas encryption ensures security at least for those types of data that are not publicly available. Security should be part of DL design from the beginning. Compliance standards that regulate data protection and privacy are incorporated in many industries, such as the Payment Card Industry Data Security Standard (PCI DSS) for financial services and Health Insurance Portability and Accountability Act (HIPAA) for healthcare.[78] Furthermore, two of the biggest regulations regarding consumer privacy—California’s Consumer Privacy Act (CCPA) and the European Union’s General Data Protection Regulation (GDPR)—restrict the ownership, use, and management of personal and private data.

A DL design must also include metadata storage functionality to help users to search and learn about the data sets in the lake.[79] A DL allows the storage of all data independently of any fixed schema. Instead, data are read at the time of processing and are parsed and adapted into a schema only as necessary. This feature saves plenty of time for enterprises.
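The idea of applying a schema only when data are read can be sketched as follows. The raw JSON lines, the schema dictionary, and the function read_with_schema are hypothetical; the point is only that nothing is validated at write time, and each reader chooses the fields and types it needs.

```python
import json

# Raw records are stored exactly as they arrived; no schema is enforced on write.
raw_lines = [
    '{"id": 1, "name": "Ada", "age": "36"}',
    '{"id": 2, "name": "Lin"}',
    '{"id": 3, "name": "Sam", "age": 41, "extra": true}',
]

# The schema is chosen by the reader at processing time (field name -> converter).
schema = {"id": int, "name": str, "age": int}


def read_with_schema(lines, schema):
    """Parse each raw line and project it onto the requested schema.

    Missing fields become None; fields not in the schema are ignored.
    """
    for line in lines:
        record = json.loads(line)
        yield {
            name: convert(record[name]) if record.get(name) is not None else None
            for name, convert in schema.items()
        }


rows = list(read_with_schema(raw_lines, schema))
print(rows)
```

A different consumer could read the same raw lines with another schema (for example, one that keeps the extra field), without any change to how the data were stored.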

Architecture in motion is another interesting concept (i.e., the architecture will likely include more than one DL and must be adaptable to address changing requirements). For instance, on-premises work with Hadoop could be moved to the cloud or a hybrid platform in the future. By facilitating the innovation of multi-cloud storage, a DL can be easily upgraded to be used across data centers, on premises, and in private clouds. In addition, ML and automation can augment the data flow capabilities of an enterprise’s DL design.

Tools and utilities

In this section, we categorize and detail the popular DW and DL tools and services.

Popular data warehouse tools and services

An enterprise DW is one of the primary components of BI.[14][16] It stores data from one or more heterogeneous sources and then analyzes and extracts insights from them to support decision making. Some of the most popular data warehousing tools are described below.

Amazon Web Services (AWS) data warehouse tools: AWS is one of the major leaders in data warehousing solutions.[80] AWS has many services, such as Amazon Redshift, Amazon Simple Storage Service (Amazon S3), and Amazon Relational Database Service (Amazon RDS), making it a very cost-effective and highly scalable platform. Amazon Redshift is a suitable platform for businesses that require advanced capabilities that exploit high-end tools.[81] It consists of an in-house team that organizes AWS’s extensive menu of services. Amazon S3 is a low-cost storage solution with industry-leading scalability, performance, and security features. Amazon RDS is an AWS cloud data storage service that runs and scales a relational database. It has resizable and cost-effective technology that facilitates an industry-standard relational database and manages all database management activities.

Google data warehouse tools: Google is highly acclaimed for its data management skills along with its dominance as a search engine. Google’s DW tools excel in cutting-edge structured data management and analytics by incorporating machine intelligence.[82] Google BigQuery is an enterprise-level, cloud-based data warehousing platform designed to save time when storing and querying large data sets; it can run fast SQL queries against multi-terabyte data sets in seconds, offering customers real-time data insights. Google Cloud Data Fusion is a fully managed cloud ETL solution that allows data integration at any size with a visual point-and-click interface. Dataflow is another cloud-based data-processing service that can be used to process data in batches or as real-time streams. Google Data Studio enables turning the data into entirely customizable, easy-to-read reports and dashboards.

Microsoft Azure Data Warehouse tools: Microsoft Azure is a recent cloud computing platform that provides infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS) as well as 200+ products and cloud services.[83] Azure SQL Database is suitable for data warehousing applications with up to 8 TB of data volume and a large number of active users, facilitating advanced query processing. Azure Synapse Analytics consists of data integration, big data analytics, and enterprise data warehousing capabilities by also integrating ML technologies.

Oracle Autonomous Data Warehouse: Oracle Autonomous Data Warehouse[84] is a cloud-based DW service that manages the complexities associated with DW development, data protection, data application development, etc. The setting, safeguarding, regulating, and backing up of data are all automated using this technology. This cloud computing solution is easy to use, secure, quick to respond, and scalable.

Snowflake: Snowflake[85] is a cloud-based DW tool offering a quick, easy-to-use, and adaptable DW platform. It has a comprehensive SaaS architecture since it runs entirely in the cloud. This makes data processing easier by permitting users to work with a single language—SQL—for data blending, analysis, and transformations on a variety of data types. Snowflake’s multi-tenant design enables real-time data exchange throughout the enterprise without relocating data.

IBM Data Warehouse tools: IBM is a preferred choice for large business clients due to its huge install base, vertical data models, various data management solutions, and real-time analytics.[86] IBM Db2 Warehouse is a cloud DW that enables self-scaling data storage and processing and deployment flexibility. Another tool is IBM DataStage, which can take data from a source system, transform it, and feed it into a target system. This enables users to merge data from several corporate systems using either an on-premises or cloud-based parallel architecture.

Popular data lake tools and services

A DL stores structured data from relational databases, as well as semi-structured data, unstructured data, and binary data, and can be set up on-premises or in the cloud.[87][88] Some of the most popular DL tools and services are described below.

Azure Data Lake: Azure Data Lake makes it easy for developers and data scientists to store data of any size, shape, and speed and conduct all types of processing and analytics across platforms and languages.[89] It removes the complexities associated with ingesting and storing the data and makes it faster to bring up and execute with batch, streaming, and interactive analytics.[90] Some of the key features of Azure Data Lake include unlimited scale and data durability, on-par performance even with demanding workloads, high security with flexible mechanisms, and cost optimization through independent scaling of storage.

AWS: AWS claims to provide “the most secure, scalable, comprehensive, and cost-effective portfolio of services for customers to build their data lake in the cloud.”[91] AWS Lake Formation helps to set up a secure DL that can collect and catalog data from databases and object storage, move the data into the new Amazon Simple Storage Service (S3) DL, and clean and classify the data using ML algorithms. It offers the scalability, agility, and flexibility that companies require to combine data and analytics approaches. AWS customers include Netflix, Zillow, NASDAQ, Yelp, and iRobot.[92]

Google BigLake: BigLake is a storage engine that unifies DWs and DLs. It removes the need to duplicate or move data, thus making the system efficient and cost-effective. BigLake provides detailed access controls and performance acceleration across BigQuery and multi-cloud DLs, with open formats to ensure a unified, flexible, and cost-effective lakehouse architecture. The top features of BigLake include (1) users being able to enforce consistent access controls across most analytics engines with a single copy of data and (2) unified governance and management at scale. Users can extend BigQuery to multi-cloud DLs and open formats with fine-grained security controls without setting up a new infrastructure.[93]

Cloudera: Cloudera SDX is a DL service for creating safe, secure, and governed DLs with protective rings around the data wherever they are stored, from object stores to the HDFS. It provides the capabilities needed for (1) data schema and metadata information, (2) metadata governance and management, (3) data access authorization and authentication, and (4) compliance-ready access auditing.[94]

Snowflake: Snowflake’s cross-cloud platform breaks down silos and enables a DL strategy. Data scientists, analysts, and developers can seamlessly leverage governed data self-service for a variety of workloads. The key features of Snowflake include (1) all data on one platform that combines structured, semi-structured, and unstructured data of any format across clouds and regions; (2) fast, reliable processing and querying, simplifying the architecture with an elastic engine to power many workloads; and (3) secure collaboration via easy integration of external data without ETL.[95]

Challenges

This section addresses some of the key challenges in big data analytics problems. In addition, the implementation challenges encountered in DW and DL paradigms are also critically analyzed.

Challenges in big data analytics

In the past few years, big data have been accumulated in every walk of human life, including healthcare, retail, public administration, and research. Web-based applications have to deal with big data frequently, such as internet text and documents (corpus, etc.), social network analysis, prediction markets, and internet search indexing.[96] Although we can clearly observe the potential and current advantages of big data, there are some inherent challenges also present that have to be tackled to achieve the full potential of big data analytics.[97]

The first hurdle for big data analytics is the need for suitable storage media and higher I/O speed.[98] Storage of big data causes a financial overhead which is not affordable or profitable for many enterprises. Furthermore, this also results in slower processes.[99] In decades gone by, analysts made use of hard disk drives for data storage purposes, but these are much slower for random I/O than for sequential I/O. To overcome this limitation, solid-state drives (SSDs) and phase change memory were introduced. However, the currently available storage technology still does not deliver the performance required for processing big data and delivering insights in a timely fashion. Companies opt for various modern techniques to handle large data sets, such as compression (reducing the number of bits within the data), data tiering (storing data in several storage tiers), and deduplication (the process of removing duplicates and unwanted data).
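Two of the techniques listed above, deduplication and compression, can be sketched with Python's standard library. The sample blocks and the function name deduplicate are assumptions for illustration; data tiering is not shown because it depends on the storage infrastructure in use.

```python
import hashlib
import zlib


def deduplicate(blocks):
    """Keep one copy of each distinct block, keyed by its SHA-256 digest."""
    store = {}
    for block in blocks:
        store.setdefault(hashlib.sha256(block).hexdigest(), block)
    return store


# Hypothetical data blocks; the first two are identical.
blocks = [b"sensor=42;" * 100, b"sensor=42;" * 100, b"sensor=43;" * 100]

unique = deduplicate(blocks)
compressed = {digest: zlib.compress(block) for digest, block in unique.items()}

raw_size = sum(len(block) for block in blocks)
stored_size = sum(len(data) for data in compressed.values())
print(f"raw bytes: {raw_size}, stored bytes after dedup + compression: {stored_size}")
```

Because the sample blocks are highly repetitive, the saving here is far larger than would be typical for real data; the sketch only shows the mechanics, not a representative compression ratio.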

Another challenge is the lack of a proper understanding of big data and the lack of skilled data professionals. Due to insufficient understanding, organizations may fail in big data initiatives. This may be due to the absence of skilled data professionals, the lack of a transparent picture for employees, or improper usage of data repositories, among other reasons. It is highly encouraged to conduct big data workshops and seminars at companies to enable every level of the organization to gain a basic understanding of data concepts. Furthermore, companies should invest in recruiting skilled professionals, supplying training programs to the staff, as well as purchasing data analytics solutions powered by advanced AI or ML tools.

Yet another challenge in big data analytics is the confusion around suitable tool selection. For instance, it is often unclear whether Hadoop or Spark is the better option for data analytics and storage. A wrong selection may saddle the organization with inappropriate technology and lead to poor decisions, wasting money, time, effort, and work hours. The best solution would be to make use of experienced professionals or data consulting to obtain a recommendation for the tools that can support a company based on its scenario.

Data in a corporation come from various sources, such as customer logs, financial reports, social media platforms, e-mails, and reports created by employees. Integrating data from such a huge spread of sources is another challenging task.[100] This consolidation task, known as data integration, is crucial for BI. Hence, enterprises purchase dedicated tools for data integration purposes. Talend Data Integration, IBM InfoSphere, Xplenty, Informatica PowerCenter, Microsoft SQL, and QlikView are some of the popular data integration tools.[101]

Security of huge data sets, especially ones that involve many confidential details of customers, is one of the inevitable challenges in big data analytics.[102][103] The careless treatment of data repositories may invite malicious hackers, and stolen records or a data breach can cost millions. The remedy would be to foster a cybersecurity division within a company to guard its data and to implement various security actions such as data encryption, data segregation, identity and access control, implementation of endpoint security, real-time security monitoring, and using big data security tools (e.g., IBM Guardium).

Data warehouse implementation challenges

Implementation of a DW requires proper planning and execution based on proper methods. Some of the major challenging considerations that arise with data warehousing are design, construction, and implementation.[104][105]

The efficiency and working of a DW are dependent on the data that support its operations. With incorrect or redundant data, DW managers cannot accurately measure the exact costs. A key solution is to automate the system to improve the lead data quality and make sure that the sales team receives complete, correct, and consistent lead information. Another major concern in a DW is the quality control of data (i.e., quality and consistency of data).[106] The BI process can be fine-tuned by incorporating flexibility to accept and integrate analytics as well as update the warehouse’s schema to handle evolutions.

Another major challenge is differences in naming, domain definitions, and identification numbers from heterogeneous sources. The DW has to be designed in such a way that it can accommodate the addition and attrition of data sources and the evolution of the sources and source data, thus avoiding major redesign. Yet another challenge is customizing the available source data into the data model of the DW because the capabilities of a DW may change over time based on the change in technology.[107] Further, broader skills are required for the administration of DWs than for traditional database administration. Hence, managing the DW in a large organization, the design of the management function, and selecting the management team for a data warehouse are some of the important aspects of a DW.

Data security is another critical requirement in DWs, given that business data are extremely sensitive and can be easily obtained.[108] Unfortunately, the typical security paradigm (based on tables, rows, and attributes) is incompatible with DWs. Consequently, the security model should be changed to one that is firmly integrated with the applicable data model and is focused on the key notions of multidimensional modeling, such as facts, dimensions, and measures. Furthermore, as is frequently advised in software engineering, information security should be considered at all stages of the development process, from requirements analysis to implementation and maintenance. In addition, DW governance is yet another important consideration, which includes approval of the data modeling standards and metadata standards, the design of a data access policy, and a data backup strategy.[109]

Data lake implementation challenges

The DL is a relatively novel technology and has not matured yet. Hence, there are many challenges in its implementation, including many of the same challenges that early DWs confronted.[77][110] The first challenge is the high cost of DLs. They are expensive to implement and maintain. DL platforms that exploit the cloud may be easier to deploy, but they may also come with high fees. Some platforms, such as Hadoop, are open-source and hence free of cost. Nevertheless, their implementation and management may take more time and more expert staff. Management difficulty is another issue.[77] The management of the DL involves various complex tasks, such as ensuring the capacity of the host infrastructure to cope with the growth of the DL and dealing with data redundancy and data security. This poses challenges even to skilled engineers. Furthermore, more domain experts and engineers with real expertise in setting up and managing DLs are required. In the current scenario, there is a shortage of both data scientists and data engineers in the field. This lack of skills is yet another challenge.

Another aspect for consideration is the long time to value (i.e., it takes years for a DL to become full-fledged and to be integrated well with the workflow and analytics tools to impart real value to the enterprise).[111] As in the case of DWs, data security is also a major concern for DLs. Special security measures are required to enforce data governance rules and to secure the data in the DL with the help of cybersecurity specialists and security tools. Another critical challenge is the demand for computation resources. Data are growing at an unprecedented rate, faster than computing power, and existing computers are not well equipped to host and manage them at that rate. Similarly, open-source data platforms face many core problems surrounding DLs that are too costly to manage; overcoming them requires massive computing power in addition to closing serious skill gaps.

To build a better DL, modernization of the way businesses build and manage DLs is required. One key takeaway is to take full advantage of the cloud, as opposed to building cumbersome DLs on a tailor-made infrastructure.[112] It helps to get rid of data silos and to build DLs that are applicable to various use cases, rather than only fitting them to a certain range of needs.

Opportunities and future directions

Based on our survey, we discuss novel trends in modern enterprise data management and point out some promising directions for future research in this section.

Data warehouses: Opportunities and future directions

The business management landscape has witnessed a massive change with the emergence of the DW. The advancements in cloud technology, the IoT, and big data analytics have brought effective data solutions to modern DWs.[79][113] With the rapid evolution of technology, many enterprises have migrated their data to the cloud to expand their networks and markets. Cloud DWs help to overcome the huge costs of purchasing, infrastructure, installation, etc.[114] Hence, in the coming years, more sophisticated cloud DW technology is envisaged to deliver powerful, easy-to-use, and economical data clouds. The long-term gains from the adoption of cloud warehousing are mainly data availability and scalability. The flexibility to store a variety of data formats (not just relational), combined with the intrinsic flexibility of cloud-based services, enables a very broad distribution of cloud services.

Another massive change is in the means of data analytics. In contrast to the older times, wherein data analytics and BI occurred in two different divisions, which delayed the overall efficiency of the system, the modern DW provides an advanced structure for storage and faster data flow, thus making them easily accessible for business users. Such an agility model is powered by data fragmentation, allowing access to and the analysis of data across the enterprise in real time.

Another big advancement is found in IoT platforms used for sharing and storing data. This has changed the face of data streaming by enabling users to store and access data across multiple devices. The concept of the IoT is more pertinent to the real world due to the increasing popularity of mobile devices, embedded and ubiquitous communication technologies, cloud computing, and data analytics. In a broader sense, as with the internet, the IoT enables devices to exist in many places and facilitates applications from trivial to the most crucial. Several technologies such as computational intelligence and big data can be incorporated together with the IoT to improve data management and knowledge discovery on a large scale. Much research in this sense has been carried out by Mishra et al.[115]

In summary, the future of DWs comprises features that enable the following:

- All data are accessible from a single location;

- The capability to outsource the task of maintaining that service’s high availability to all customers;

- Governance based on policies;

- Platforms with high user experience (UX) discoverability; and

- Platforms that cater to all customers.

Data lakes: Opportunities and future directions

One of the core capabilities of a DL architecture is its ability to quickly and easily ingest multiple types of data (e.g., real-time streaming data from on-premises storage platforms, structured data generated and processed by mainframes and DWs, and unstructured or semi-structured data). The ingestion process makes use of a high degree of parallelism and low latency since it requires interfacing with external data sources with limited bandwidth. Hence, ingestion will not carry out any deep analysis of the downloaded data. However, there are possibilities for applying shallow data sketches on the downloaded contents and their metadata to maintain a basic organization of the ingested data sets.

In another phase of DL management (i.e., the data extraction stage), the raw data are transformed into a predetermined data model. Although various studies have been conducted on this topic, there still remains room for improvement. Rather than conducting extraction on one file at a time, one can take advantage of the knowledge from the history of extractions. Similarly, for the cleaning phase of the DL, not much work has been performed in the literature other than some approaches such as CLAMS.[51] One opportunity in this regard will be to make use of the lake’s wisdom and perform collective data cleaning. In addition, it is important to investigate the possible sources of errors in the lake and to get rid of them efficiently to obtain a clean DL.

The common methods to retrieve the data from the DL are query-based retrieval (i.e., a user starts a search with a query for data retrieval) and data-based retrieval (i.e., a user navigates a DL as a linkage graph or a hierarchical structure to find data of interest).[77] A new direction may be to incorporate analysis-driven or context-driven approaches (i.e., augmenting a data set with relevant data and some contextual information to facilitate learning tasks).

Another direction of research is related to the exploration of ML in DLs. Specifically, many studies are underway focusing on ML application toward data set organization and discovery. The data set discovery task is often associated with finding “similar” attributes extracted from the data, metadata, etc. which could be further coupled with classification or clustering tasks. Some recent works have leveraged ML techniques, such as the KNN classifier[116] and a logistic regression model for optimizing feature coefficients.[117] More advanced deep learning and similar sophisticated ML techniques are envisaged to augment the data set discovery process in the coming years.
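The notion of finding "similar" attributes for data set discovery can be illustrated with a much simpler measure than the ML techniques in the cited works: the Jaccard similarity between the sets of values in two columns. This is a swapped-in, illustrative technique, not the method of the cited studies, and the column names and values below are hypothetical.

```python
def jaccard(a, b):
    """Jaccard similarity of two value collections: |A ∩ B| / |A ∪ B|."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical columns drawn from different data sets in the lake.
columns = {
    "crm.customer_city": ["Paris", "Lyon", "Nice", "Lille"],
    "orders.ship_city": ["Paris", "Lyon", "Nice", "Metz"],
    "hr.department": ["Sales", "Legal", "Finance"],
}


def most_similar(query, columns):
    """Score every other column against the query column and return the best match."""
    scores = {
        name: jaccard(columns[query], values)
        for name, values in columns.items()
        if name != query
    }
    return max(scores, key=scores.get), scores


best, scores = most_similar("crm.customer_city", columns)
print(best, scores)
```

Scores of this kind could serve as input features for the classification or clustering tasks mentioned above, although how the cited works construct their features is not specified here.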

Metadata management is an important task in a DL, since a DL does not come with descriptive data catalogs.[77][79] Due to the lack of such explicit metadata of data sets, especially during the discovery and cleaning of data, there is a chance for a DL to become a data swamp. Hence, it is quite necessary to extract meaningful metadata from data sources and to support efficient storage and query answering of metadata. In this field of metadata management, there remain more topics to explore further in extracting knowledge from lake data and incorporating them into existing knowledge bases. Yet another key aspect is data versioning, wherein new versions of the already existing files enter into a dynamic DL.[79] Since versioning-related operations can affect all stages of a DL, it is a crucial aspect to address. There are some large-scale data set version control tools, such as DataHub, that provide a Git-like interface to handle version creation, branching, and merging operations. Nevertheless, more research and development may be carried out further to deal with schema evolution.

As a final note, there is an emerging data management architecture trend called the "data lakehouse" that couples the flexibility of a DL with the data management capabilities of a DW. Specifically, it is considered a unique data storage solution for all data (unstructured, semi-structured, and structured) while providing the data quality and data governance standards of a DW.[118] Such a data lakehouse would be capable of providing better data governance, reduced data movement and redundancy, efficient use of time, etc., even with a simplified schema. The data lakehouse is expected to be a fruitful research area in data management in the future.

Conclusions

Enterprises and business organizations exploit a huge volume of data to understand their customers and to make informed business decisions to stay competitive in the field. However, big data come in a variety of formats and types (e.g., structured, semi-structured, and unstructured data), making it difficult for businesses to manage and use them effectively. Based on the structure of the data, two types of data storage are typically utilized in enterprise data management: the DW and the DL. Although the two terms are sometimes used interchangeably, they are distinct storage forms with unique characteristics that serve different purposes.

In this review, a comparative analysis of DWs and DLs was undertaken by highlighting the key differences between the two data management approaches. In particular, the definitions of the DW and DL were detailed, along with their characteristics. Furthermore, the architecture and design aspects of both DWs and DLs were discussed. In addition, a detailed overview of the popular DW and DL tools and services was provided. The key challenges of big data analytics in general, as well as the challenges of implementing DWs and DLs, were also critically analyzed. Finally, the opportunities and future research directions were considered. We hope that the thorough comparison of existing DWs vs. DLs and the discussion of open research challenges in this survey will motivate the future development of enterprise data management and benefit the research community significantly.

Abbreviations, acronyms, and initialisms

- AI: artificial intelligence

- API: application programming interface

- ARDS: Amazon Relational Database Service

- AWS: Amazon Web Services

- AWS S3: AWS Simple Storage Service

- BI: business intelligence

- CCPA: California Consumer Privacy Act

- DAG: directed acyclic graph

- DL: data lake

- DW: data warehouse

- ELT: extract, load, and transform

- ETL: extract, transform, and load

- GDPR: General Data Protection Regulation

- HDFS: Hadoop Distributed File System

- HIPAA: Health Insurance Portability and Accountability Act

- IaaS: infrastructure as a service

- IoT: internet of things

- MDDB: multidimensional databases

- ML: machine learning

- MOLAP: multidimensional OLAP

- MR: MapReduce

- MRFR: Market Research Future

- OLAP: online analytical processing

- OLTP: online transaction processing

- PaaS: platform as a service

- PCI DSS: Payment Card Industry Data Security Standard

- RDBMS: relational database management system

- ROLAP: relational OLAP

- SaaS: software as a service

- SDDM: SQL Developer Data Modeler

- SSD: solid-state drive

Acknowledgements

Author contributions

Conceptualization, A.N. and D.M.; methodology, A.N. and D.M.; validation, A.N.; formal analysis, D.M.; investigation, A.N.; data curation, A.N. and D.M.; writing—original draft preparation, A.N. and D.M.; writing—review and editing, A.N. and D.M.; visualization, A.N.; supervision, A.N.; project administration, A.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of interest

The authors declare no conflict of interest.

References

- ↑ Tsai, Chun-Wei; Lai, Chin-Feng; Chao, Han-Chieh; Vasilakos, Athanasios V. (1 December 2015). "Big data analytics: a survey" (in en). Journal of Big Data 2 (1): 21. doi:10.1186/s40537-015-0030-3. ISSN 2196-1115. http://www.journalofbigdata.com/content/2/1/21.

- ↑ Statista Research Department (13 June 2022). "Big data - Statistics & Facts". Statista. Archived from the original on 03 October 2022. https://web.archive.org/web/20221003013950/https://www.statista.com/topics/1464/big-data/#topicHeader__wrapper. Retrieved 27 October 2022.

- ↑ Wise, J. (12 October 2022). "Big Data Statistics 2022: Facts, Market Size & Industry Growth". Earthweb. Archived from the original on 10 November 2022. https://web.archive.org/web/20221110131001/https://earthweb.com/big-data-statistics/.

- ↑ Jain, A. (17 September 2016). "The 5 V's of big data". Watson Health Perspectives. IBM. Archived from the original on 31 March 2022. https://web.archive.org/web/20220331120927/https://www.ibm.com/blogs/watson-health/the-5-vs-of-big-data/. Retrieved 27 October 2022.

- ↑ Gandomi, Amir; Haider, Murtaza (1 April 2015). "Beyond the hype: Big data concepts, methods, and analytics" (in en). International Journal of Information Management 35 (2): 137–144. doi:10.1016/j.ijinfomgt.2014.10.007. https://linkinghub.elsevier.com/retrieve/pii/S0268401214001066.

- ↑ 6.0 6.1 Sun, Zhaohao; Zou, Huasheng; Strang, Kenneth (2015), Janssen, Marijn; Mäntymäki, Matti; Hidders, Jan et al., eds., "Big Data Analytics as a Service for Business Intelligence" (in en), Open and Big Data Management and Innovation (Cham: Springer International Publishing) 9373: 200–211, doi:10.1007/978-3-319-25013-7_16, ISBN 978-3-319-25012-0, http://link.springer.com/10.1007/978-3-319-25013-7_16. Retrieved 2 May 2023.

- ↑ "Big Data and Analytics Services Global Market Report 2022". ReportLinker. March 2022. Archived from the original on 09 June 2022. https://web.archive.org/web/20220609081444/https://www.reportlinker.com/p06246484/Big-Data-and-Analytics-Services-Global-Market-Report.html. Retrieved 27 October 2022.

- ↑ Vailshery, L.S. (21 February 2022). "Global BI & analytics software market size 2019-2025". Statista. https://www.statista.com/statistics/590054/worldwide-business-analytics-software-vendor-market/. Retrieved 27 October 2022.

- ↑ 9.0 9.1 Kumar, S. (24 December 2019). "What is a Data Repository and What is it Used for?". Stealthbits Technologies, Inc. Archived from the original on 04 October 2022. https://web.archive.org/web/20221004042118/https://stealthbits.com/blog/what-is-a-data-repository-and-what-is-it-used-for/. Retrieved 27 October 2022.

- ↑ 10.0 10.1 Khine, Pwint Phyu; Wang, Zhao Shun (2018). Eguchi, Kei; Chen, Tong. eds. "Data lake: a new ideology in big data era". ITM Web of Conferences 17: 03025. doi:10.1051/itmconf/20181703025. ISSN 2271-2097. https://www.itm-conferences.org/10.1051/itmconf/20181703025.

- ↑ 11.0 11.1 Arif, Muhammad; Mujtaba, Ghulam (31 May 2015). "A Survey: Data Warehouse Architecture". International Journal of Hybrid Information Technology 8 (5): 349–356. doi:10.14257/ijhit.2015.8.5.37. http://gvpress.com/journals/IJHIT/vol8_no5/37.pdf.

- ↑ 12.0 12.1 12.2 El Aissi, Mohamed El Mehdi; Benjelloun, Sarah; Loukili, Yassine; Lakhrissi, Younes; Boushaki, Abdessamad El; Chougrad, Hiba; Elhaj Ben Ali, Safae (2022), Bennani, Saad; Lakhrissi, Younes; Khaissidi, Ghizlane et al., eds., "Data Lake Versus Data Warehouse Architecture: A Comparative Study" (in en), WITS 2020 (Singapore: Springer Singapore) 745: 201–210, doi:10.1007/978-981-33-6893-4_19, ISBN 978-981-336-892-7, https://link.springer.com/10.1007/978-981-33-6893-4_19

- ↑ 13.0 13.1 Rehman, Khawaja Ubaid ur; Ahmad, Umair; Mahmood, Sajid (30 March 2018). "A Comparative Analysis of Traditional and Cloud Data Warehouse". VAWKUM Transactions on Computer Sciences 15 (1): 34. doi:10.21015/vtcs.v15i1.487. ISSN 2308-8168. https://vfast.org/journals/index.php/VTCS/article/view/487.

- ↑ 14.0 14.1 Devlin, B. A.; Murphy, P. T. (1988). "An architecture for a business and information system". IBM Systems Journal 27 (1): 60–80. doi:10.1147/sj.271.0060. ISSN 0018-8670. http://ieeexplore.ieee.org/document/5387658/.

- ↑ Garani, Georgia; Chernov, Andrey; Savvas, Ilias; Butakova, Maria (1 June 2019). "A Data Warehouse Approach for Business Intelligence". 2019 IEEE 28th International Conference on Enabling Technologies: Infrastructure for Collaborative Enterprises (WETICE) (Napoli, Italy: IEEE): 70–75. doi:10.1109/WETICE.2019.00022. ISBN 978-1-7281-0676-2. https://ieeexplore.ieee.org/document/8795395/.

- ↑ 16.0 16.1 Gupta, V.; Singh, J. (2014). "A Review of Data Warehousing and Business Intelligence in different perspective" (PDF). International Journal of Computer Science & Information Technologies 5 (6): 8263–8. ISSN 0975-9646. https://www.ijcsit.com/docs/Volume%205/vol5issue06/ijcsit20140506304.pdf.

- ↑ Sagiroglu, Seref; Sinanc, Duygu (1 May 2013). "Big data: A review". 2013 International Conference on Collaboration Technologies and Systems (CTS): 42–47. doi:10.1109/CTS.2013.6567202. https://ieeexplore.ieee.org/document/6567202/.

- ↑ Moore, M. (7 April 2016). "The 7 V’s of Big Data". Impact.com. https://impact.com/marketing-intelligence/7-vs-big-data/. Retrieved 25 September 2022.

- ↑ Miloslavskaya, Natalia; Tolstoy, Alexander (1 August 2016). "Application of Big Data, Fast Data, and Data Lake Concepts to Information Security Issues". 2016 IEEE 4th International Conference on Future Internet of Things and Cloud Workshops (FiCloudW) (Vienna, Austria: IEEE): 148–153. doi:10.1109/W-FiCloud.2016.41. ISBN 978-1-5090-3946-3. http://ieeexplore.ieee.org/document/7592715/.

- ↑ Giebler, Corinna; Stach, Christoph; Schwarz, Holger; Mitschang, Bernhard (2018). "BRAID - A Hybrid Processing Architecture for Big Data". Proceedings of the 7th International Conference on Data Science, Technology and Applications (Porto, Portugal: SCITEPRESS - Science and Technology Publications): 294–301. doi:10.5220/0006861802940301. ISBN 978-989-758-318-6. http://www.scitepress.org/DigitalLibrary/Link.aspx?doi=10.5220/0006861802940301.

- ↑ Lin, Jimmy (2017). "The Lambda and the Kappa". IEEE Internet Computing 21 (5): 60–66. doi:10.1109/MIC.2017.3481351. ISSN 1089-7801. http://ieeexplore.ieee.org/document/8039313/.

- ↑ 22.0 22.1 Devlin, B. (20 April 2020). "Thirty Years of Data Warehousing – Part 1". IRM UK connects. https://www.irmconnects.com/thirty-years-of-data-warehousing-part-1/. Retrieved 27 October 2022.

- ↑ Inmon, William H. (2005). Building the data warehouse (4th ed.). Indianapolis, Ind: Wiley. ISBN 978-0-7645-9944-6.

- ↑ Chandra, Pravin; Gupta, Manoj K. (1 June 2018). "Comprehensive survey on data warehousing research" (in en). International Journal of Information Technology 10 (2): 217–224. doi:10.1007/s41870-017-0067-y. ISSN 2511-2104. http://link.springer.com/10.1007/s41870-017-0067-y.

- ↑ Simões, D. (October 2010). "Enterprise data warehouses: A conceptual framework for a successful implementation" (PDF). Proceedings of the 2010 Canadian Council for Small Business & Entrepreneurship Annual Conference: 1–19. https://www.researchgate.net/profile/Dora-Simoes/publication/233813118_Enterprise_Data_Warehouses_A_conceptual_framework_for_a_successful_implementation/links/09e4150c654d7e5312000000/Enterprise-Data-Warehouses-A-conceptual-framework-for-a-successful-implementation.pdf.

- ↑ Al-Debei, M.M. (2011). "Data Warehouse as a Backbone for Business Intelligence: Issues and Challenges". European Journal of Economics, Finance and Administrative Sciences 33 (2011): 153–66. http://eacademic.ju.edu.jo/m.aldebei/Lists/Published%20Research/DispForm.aspx?ID=15.

- ↑ Market Research Future (9 December 2021). "Data Warehouse as a Service (DWaaS) Market Predicted to Garner USD 7.69 Billion at a CAGR of 24.5% by 2028 - Report by Market Research Future (MRFR)". yahoo! finance. https://finance.yahoo.com/news/data-warehouse-dwaas-market-predicted-153000649.html. Retrieved 27 October 2022.

- ↑ Chaudhuri, Surajit; Dayal, Umeshwar (1 March 1997). "An overview of data warehousing and OLAP technology" (in en). ACM SIGMOD Record 26 (1): 65–74. doi:10.1145/248603.248616. ISSN 0163-5808. https://dl.acm.org/doi/10.1145/248603.248616.

- ↑ 29.0 29.1 29.2 29.3 Codd, E.F.; Codd, S.B.; Salley, C.T. (1993). "Providing OLAP to User-Analysts: An IT Mandate" (PDF). E. F. Codd & Associates. pp. 1–20. http://www.estgv.ipv.pt/PaginasPessoais/jloureiro/ESI_AID2007_2008/fichas/codd.pdf.

- ↑ Gupta, A. (2020). "The Best Applications of Data Warehousing". DataChannel Blog. DataChannel Technologies. https://www.datachannel.co/blogs/best-applications-of-data-warehousing. Retrieved 27 October 2022.

- ↑ 31.0 31.1 31.2 31.3 Hai, Rihan; Koutras, Christos; Quix, Christoph; Jarke, Matthias (2021). Data Lakes: A Survey of Functions and Systems. doi:10.48550/ARXIV.2106.09592. https://arxiv.org/abs/2106.09592.

- ↑ 32.0 32.1 Zagan, Elisabeta; Danubianu, Mirela (1 May 2020). "Data Lake Approaches: A Survey". 2020 International Conference on Development and Application Systems (DAS) (Suceava, Romania: IEEE): 189–193. doi:10.1109/DAS49615.2020.9108912. ISBN 978-1-7281-6870-8. https://ieeexplore.ieee.org/document/9108912/.

- ↑ 33.0 33.1 33.2 Cherradi, Mohamed; EL Haddadi, Anass (2022), Ben Ahmed, Mohamed; Boudhir, Anouar Abdelhakim; Karaș, İsmail Rakıp et al., eds., "Data Lakes: A Survey Paper" (in en), Innovations in Smart Cities Applications Volume 5 (Cham: Springer International Publishing) 393: 823–835, doi:10.1007/978-3-030-94191-8_66, ISBN 978-3-030-94190-1, https://link.springer.com/10.1007/978-3-030-94191-8_66. Retrieved 2 May 2023.

- ↑ 34.0 34.1 Dixon, J. (14 October 2010). "Pentaho, Hadoop, and Data Lakes". James Dixon's Blog. https://jamesdixon.wordpress.com/2010/10/14/pentaho-hadoop-and-data-lakes/. Retrieved 27 October 2022.

- ↑ King, T. (3 March 2016). "The Emergence of Data Lake: Pros and Cons". Solutions Review. https://solutionsreview.com/data-integration/the-emergence-of-data-lake-pros-and-cons/. Retrieved 27 October 2022.

- ↑ Alrehamy, Hassan; Walker, Coral (2015) (in en). Personal Data Lake With Data Gravity Pull. doi:10.13140/RG.2.1.2817.8641. http://rgdoi.net/10.13140/RG.2.1.2817.8641.